Chapter 12: K8S Service Components: kube-dns & Dashboard

Last updated: 2022-04-02 05:07:04

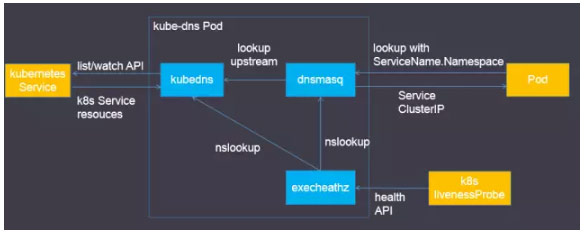

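## How It Works

- What the kubedns container does:
  - Embeds SkyDNS and answers queries on behalf of dnsmasq
  - Replaces the etcd container, keeping DNS records in memory in a tree structure
  - Watches Service resources through the K8S API and updates the DNS records when they change
  - Serves on port 10053
  - Checks the health of both containers.
- What the dnsmasq container does:
  - Dnsmasq is a small, lightweight DNS configuration tool
  - Its role in the kube-dns add-on:
    - Obtains DNS rules from the kubedns container and serves DNS queries inside the cluster
    - Provides a DNS cache, which improves query performance
    - Reduces the load on the kubedns container and improves stability
  - In the kube-dns add-on manifest, dnsmasq is pointed at kubedns as its upstream with the argument `--server=127.0.0.1#10053`.
- What the exec-healthz container does:
  - Provides health checks for the kube-dns add-on
  - Health-checks both containers, which makes the check more thorough. (In the 1.14.x manifest used in this chapter, this role is played by the `sidecar` container.)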
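
The idea of keeping DNS records in memory as a tree can be illustrated with a small sketch. This is not the kube-dns source code: the `DnsTree` class and the `service_fqdn` helper are hypothetical names made up for illustration, and the example only assumes the usual `<service>.<namespace>.svc.<domain>` naming with the `cluster.local` domain used later in this chapter.

```python
from typing import Dict, List, Optional


class DnsTree:
    """Toy in-memory DNS store: one tree node per domain label, root first."""

    def __init__(self) -> None:
        self.children: Dict[str, "DnsTree"] = {}
        self.addresses: List[str] = []

    @staticmethod
    def _labels(name: str) -> List[str]:
        # "a.b.c." -> ["c", "b", "a"], so names in the same zone share parent nodes.
        return [label for label in reversed(name.rstrip(".").split(".")) if label]

    def set_record(self, name: str, addresses: List[str]) -> None:
        # Walk (and create) one node per label, then store the A records on the leaf.
        node = self
        for label in self._labels(name):
            node = node.children.setdefault(label, DnsTree())
        node.addresses = list(addresses)

    def lookup(self, name: str) -> Optional[List[str]]:
        # Return the stored addresses, or None if the name is unknown.
        node = self
        for label in self._labels(name):
            child = node.children.get(label)
            if child is None:
                return None
            node = child
        return node.addresses or None


def service_fqdn(service: str, namespace: str, domain: str = "cluster.local") -> str:
    # Hypothetical helper: builds the DNS name a Service would be published under.
    return f"{service}.{namespace}.svc.{domain}"


tree = DnsTree()
# In kube-dns this update would be triggered by a Service change seen via the K8S API.
tree.set_record(service_fqdn("kube-dns", "kube-system"), ["10.254.0.2"])
print(tree.lookup("kube-dns.kube-system.svc.cluster.local."))
```

A lookup walks the labels from the root (`local`) down to the service name, so a missing namespace or service is detected as soon as a label has no child node.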

#### Note: kube-dns component [GitHub download link](https://github.com/kubernetes/kubernetes)

### Create kubedns-cm.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: EnsureExists

### Compare the changes to the kubedns-dns-controller configuration file

    diff kubedns-controller.yaml.sed /root/kubernetes/k8s-deploy/mainifest/dns/kubedns-controller.yaml
    58c58
    <         image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.5
    ---
    >         image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4
    88c88
    <         - --domain=$DNS_DOMAIN.
    ---
    >         - --domain=cluster.local.
    109c109
    <         image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.5
    ---
    >         image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4
    128c128
    <         - --server=/$DNS_DOMAIN/127.0.0.1#10053
    ---
    >         - --server=/cluster.local/127.0.0.1#10053
    147c147
    <         image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.5
    ---
    >         image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4
    160,161c160,161
    <         - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.$DNS_DOMAIN,5,A
    <         - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.$DNS_DOMAIN,5,A
    ---
    >         - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A
    >         - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A
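
Apart from the image tags, the diff above amounts to replacing the `$DNS_DOMAIN` placeholder with `cluster.local`. As a rough sketch of that substitution (the `render` helper and the three sample lines are illustrative, not part of the upstream tooling), Python's `string.Template` can fill the placeholder:

```python
from string import Template

# Three placeholder lines taken from the "<" side of the diff.
TEMPLATE_LINES = [
    "- --domain=$DNS_DOMAIN.",
    "- --server=/$DNS_DOMAIN/127.0.0.1#10053",
    "- --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.$DNS_DOMAIN,5,A",
]


def render(lines, dns_domain):
    """Replace $DNS_DOMAIN in every line; unknown placeholders are left untouched."""
    return [Template(line).safe_substitute(DNS_DOMAIN=dns_domain) for line in lines]


rendered = render(TEMPLATE_LINES, "cluster.local")
for line in rendered:
    print(line)
```

The trailing dot in `--domain=cluster.local.` comes from the template itself, not from the substituted value.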

### Create kubedns-dns-controller

    # Should keep target in cluster/addons/dns-horizontal-autoscaler/dns-horizontal-autoscaler.yaml
    # in sync with this file.

    # Warning: This is a file generated from the base underscore template file: kubedns-controller.yaml.base

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      # replicas: not specified here:
      # 1. In order to make Addon Manager do not reconcile this replicas parameter.
      # 2. Default is 1.
      # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        rollingUpdate:
          maxSurge: 10%
          maxUnavailable: 0
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          volumes:
          - name: kube-dns-config
            configMap:
              name: kube-dns
              optional: true
          containers:
          - name: kubedns
            image: gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4
            resources:
              # TODO: Set memory limits when we've profiled the container for large
              # clusters, then set request = limit to keep this container in
              # guaranteed class. Currently, this container falls into the
              # "burstable" category so the kubelet doesn't backoff from restarting it.
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            livenessProbe:
              httpGet:
                path: /healthcheck/kubedns
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /readiness
                port: 8081
                scheme: HTTP
              # we poll on pod startup for the Kubernetes master service and
              # only setup the /readiness HTTP server once that's available.
              initialDelaySeconds: 3
              timeoutSeconds: 5
            args:
            - --domain=cluster.local.
            - --dns-port=10053
            - --config-dir=/kube-dns-config
            - --v=2
            env:
            - name: PROMETHEUS_PORT
              value: "10055"
            ports:
            - containerPort: 10053
              name: dns-local
              protocol: UDP
            - containerPort: 10053
              name: dns-tcp-local
              protocol: TCP
            - containerPort: 10055
              name: metrics
              protocol: TCP
            volumeMounts:
            - name: kube-dns-config
              mountPath: /kube-dns-config
          - name: dnsmasq
            image: gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4
            livenessProbe:
              httpGet:
                path: /healthcheck/dnsmasq
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            args:
            - -v=2
            - -logtostderr
            - -configDir=/etc/k8s/dns/dnsmasq-nanny
            - -restartDnsmasq=true
            - --
            - -k
            - --cache-size=1000
            - --log-facility=-
            - --server=/cluster.local/127.0.0.1#10053
            - --server=/in-addr.arpa/127.0.0.1#10053
            - --server=/ip6.arpa/127.0.0.1#10053
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
            resources:
              requests:
                cpu: 150m
                memory: 20Mi
            volumeMounts:
            - name: kube-dns-config
              mountPath: /etc/k8s/dns/dnsmasq-nanny
          - name: sidecar
            image: gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4
            livenessProbe:
              httpGet:
                path: /metrics
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            args:
            - --v=2
            - --logtostderr
            - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A
            - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A
            ports:
            - containerPort: 10054
              name: metrics
              protocol: TCP
            resources:
              requests:
                memory: 20Mi
                cpu: 10m
          dnsPolicy: Default  # Don't use cluster DNS.
          serviceAccountName: kube-dns
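
The two `--probe` arguments of the sidecar container pack five comma-separated fields into one string. The sketch below splits them apart, assuming the field order is label, server, DNS name, interval in seconds, and record type; the `Probe` dataclass and `parse_probe` function are hypothetical names used only to make the layout explicit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Probe:
    label: str             # names the health check, e.g. "kubedns"
    server: str            # host:port the DNS query is sent to
    name: str              # DNS name that is resolved
    interval_seconds: int  # assumed meaning of the fourth field
    record_type: str       # DNS record type, e.g. "A"


def parse_probe(arg: str) -> Probe:
    """Split one '--probe=label,server,name,interval,type' argument into fields."""
    prefix = "--probe="
    if not arg.startswith(prefix):
        raise ValueError(f"not a probe argument: {arg!r}")
    parts = arg[len(prefix):].split(",")
    if len(parts) != 5:
        raise ValueError(f"expected 5 comma-separated fields, got {len(parts)}")
    label, server, name, interval, record_type = parts
    return Probe(label, server, name, int(interval), record_type)


kubedns_probe = parse_probe(
    "--probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A"
)
dnsmasq_probe = parse_probe(
    "--probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A"
)
print(kubedns_probe)
print(dnsmasq_probe)
```

Read this way, the labels line up with the liveness probe paths `/healthcheck/kubedns` and `/healthcheck/dnsmasq` on port 10054, and the two servers are kubedns on port 10053 and dnsmasq on port 53.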

### Create kubedns-svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "KubeDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.254.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
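
The fixed `clusterIP: 10.254.0.2` only works if it lies inside the cluster's Service IP range (the kube-apiserver `--service-cluster-ip-range` setting), and the kubelet `--cluster-dns` flag normally points at the same address. A quick sanity check can be sketched as follows; the range `10.254.0.0/16` is an assumption, since this chapter does not state it, and `dns_ip_in_service_range` is a hypothetical helper.

```python
import ipaddress

# Assumption: the Service CIDR of this cluster. Replace it with your own value.
SERVICE_CIDR = "10.254.0.0/16"


def dns_ip_in_service_range(cluster_ip: str, service_cidr: str) -> bool:
    """Return True if the DNS Service IP belongs to the Service CIDR."""
    return ipaddress.ip_address(cluster_ip) in ipaddress.ip_network(service_cidr)


print(dns_ip_in_service_range("10.254.0.2", SERVICE_CIDR))
```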

### Create kubedns-sa.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile

## Deploy dns-horizontal-autoscaler

### Create dns-horizontal-autoscaler-rbac.yaml

    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: kube-dns-autoscaler
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: system:kube-dns-autoscaler
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["list"]
      - apiGroups: [""]
        resources: ["replicationcontrollers/scale"]
        verbs: ["get", "update"]
      - apiGroups: ["extensions"]
        resources: ["deployments/scale", "replicasets/scale"]
        verbs: ["get", "update"]
    # Remove the configmaps rule once below issue is fixed:
    # kubernetes-incubator/cluster-proportional-autoscaler#16
      - apiGroups: [""]
        resources: ["configmaps"]
        verbs: ["get", "create"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: system:kube-dns-autoscaler
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
    subjects:
      - kind: ServiceAccount
        name: kube-dns-autoscaler
        namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: system:kube-dns-autoscaler
      apiGroup: rbac.authorization.k8s.io

### Create dns-horizontal-autoscaler.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: kube-dns-autoscaler
      namespace: kube-system
      labels:
        k8s-app: kube-dns-autoscaler
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      template:
        metadata:
          labels:
            k8s-app: kube-dns-autoscaler
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          containers:
          - name: autoscaler
            image: gcr.io/google_containers/cluster-proportional-autoscaler-amd64:1.1.2-r2
            resources:
              requests:
                cpu: "20m"
                memory: "10Mi"
            command:
              - /cluster-proportional-autoscaler
              - --namespace=kube-system
              - --configmap=kube-dns-autoscaler
              # Should keep target in sync with cluster/addons/dns/kubedns-controller.yaml.base
              - --target=Deployment/kube-dns
              # When cluster is using large nodes(with more cores), "coresPerReplica" should dominate.
              # If using small nodes, "nodesPerReplica" should dominate.
              - --default-params={"linear":{"coresPerReplica":256,"nodesPerReplica":16,"preventSinglePointFailure":true}}
              - --logtostderr=true
              - --v=2
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          serviceAccountName: kube-dns-autoscaler
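
To see what the `--default-params` value means in practice, the sketch below reproduces the linear scaling rule as it is commonly described for cluster-proportional-autoscaler: take the larger of cores divided by `coresPerReplica` and nodes divided by `nodesPerReplica` (both rounded up), and keep at least two replicas on multi-node clusters when `preventSinglePointFailure` is true. The function name and the sample cluster sizes are illustrative assumptions, and optional `min`/`max` bounds are left out because the manifest does not set them.

```python
import math


def linear_replicas(
    cores: int,
    nodes: int,
    cores_per_replica: int = 256,
    nodes_per_replica: int = 16,
    prevent_single_point_failure: bool = True,
) -> int:
    """Approximate the 'linear' mode with the parameters from the manifest above."""
    replicas = max(
        math.ceil(cores / cores_per_replica),
        math.ceil(nodes / nodes_per_replica),
    )
    # With more than one node, keep two replicas so a single node loss cannot take DNS down.
    if prevent_single_point_failure and nodes > 1:
        replicas = max(replicas, 2)
    return max(replicas, 1)


# Hypothetical cluster sizes: (total cores, node count).
for cores, nodes in [(4, 1), (12, 3), (400, 100), (2560, 40)]:
    print(cores, nodes, linear_replicas(cores, nodes))
```

With many small nodes (400 cores on 100 nodes) the node count decides the result, while with a few large nodes (2560 cores on 40 nodes) the core count does, which matches the comment in the manifest.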

### Create dashboard-rbac

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: dashboard
      namespace: kube-system
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: dashboard
    subjects:
      - kind: ServiceAccount
        name: dashboard
        namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: cluster-admin
      apiGroup: rbac.authorization.k8s.io

### Create dashboard-controller

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: kubernetes-dashboard
      namespace: kube-system
      labels:
        k8s-app: kubernetes-dashboard
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          containers:
          - name: kubernetes-dashboard
            image: gcr.io/google_containers/kubernetes-dashboard-amd64:v1.6.1
            resources:
              # keep request = limit to keep this container in guaranteed class
              limits:
                cpu: 100m
                memory: 300Mi
              requests:
                cpu: 100m
                memory: 100Mi
            ports:
            - containerPort: 9090
            args:
            - --apiserver-host=http://172.16.200.100:8080
            livenessProbe:
              httpGet:
                path: /
                port: 9090
              initialDelaySeconds: 30
              timeoutSeconds: 30
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
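
The comment in this manifest says that request should equal limit so the container stays in the guaranteed class, yet the memory request (100Mi) is lower than the memory limit (300Mi). The sketch below applies a simplified, single-container version of the Kubernetes QoS rules to show the effect. `qos_class` is a hypothetical helper, it compares quantities as plain strings without unit normalization, and it assumes that a missing request defaults to the limit.

```python
def qos_class(resources: dict) -> str:
    """Simplified QoS classification for a pod with a single container."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    if not requests and not limits:
        return "BestEffort"
    # Guaranteed needs CPU and memory limits, each equal to the matching request.
    guaranteed = all(
        res in limits and requests.get(res, limits[res]) == limits[res]
        for res in ("cpu", "memory")
    )
    return "Guaranteed" if guaranteed else "Burstable"


# Values copied from the dashboard and kubedns containers in this chapter.
dashboard = {
    "limits": {"cpu": "100m", "memory": "300Mi"},
    "requests": {"cpu": "100m", "memory": "100Mi"},
}
kubedns = {
    "limits": {"memory": "170Mi"},
    "requests": {"cpu": "100m", "memory": "70Mi"},
}
print(qos_class(dashboard))
print(qos_class(kubedns))
```

Under these rules both containers are classified as Burstable; raising the dashboard memory request to 300Mi (or lowering the limit to 100Mi) would be needed for the guaranteed class that the comment describes.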

### Create dashboard-service

    apiVersion: v1
    kind: Service
    metadata:
      name: kubernetes-dashboard
      namespace: kube-system
      labels:
        k8s-app: kubernetes-dashboard
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      type: NodePort
      selector:
        k8s-app: kubernetes-dashboard
      ports:
      - port: 80
        targetPort: 9090
